SEO in a Two Algorithm World: Pubcon Keynote by Rand Fishkin was originally published on BruceClay.com, home of expert search engine optimization tips.

Rand dedicates this presentation to Dana Lookadoo, who will always be with us.

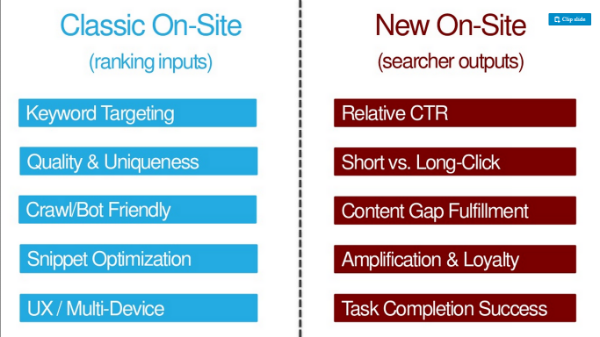

This author’s TL;DR take: On top of traditional SEO factors (ranking inputs such as keyword targeting, quality and uniqueness, crawl/bot friendliness, snippet optimization, and UX/multi-device optimization), SEOs need to optimize for searcher outputs (CTR, long clicks, content gap fulfillment, amplification and loyalty, task completion success).

Here’s where you can get the presentation: http://bit.ly/twoalgo

Remember when we only had one job? We had to make perfectly optimized pages. The search quality team would get them ranked, and they used links as a major signal. By 2007, link spam was ubiquitous. Every SEO is obsessed with tower defense games because we love to optimize. Even in 2012, it felt like Google was making liars out of the white hat SEO world (-Wil Reynolds). Rand says that statement isn’t true anymore. Today Google rewards authentic, great content better than it ever has. They’ve largely erased old-school practices like link spam. And they’ve leveraged fear and uncertainty of penalization to keep sites in line. Disavowing is often so fraught that many of us are killing links that provide value to our sites because we’re so afraid of penalties.

Google has also become good at figuring out intent. They look at language and not just keywords.

They predict diverse results.

They’ve figured out when we want freshness.

They segment navigational from informational queries. They connect entities to topics and keywords. Brands have become a form of entity. Bill Slawski has noted that Google mentions brands in a number of its filed patents.

Google is much more in line with their public statements. Their policies mostly match the best way to do search marketing today.

During these advances, Google’s search quality team underwent a revolution. Early on, Google rejected machine learning in their organic ranking algorithm. Google said that machine learning didn’t let them own, control and understand the factors in the algorithm. But more recently, Amit Singhal’s comments suggest some of that has changed. In 2012 Google published a paper on how they use machine learning to predict ad click-through rate. Google engineers called it their SmartASS system (apparently that’s ACTUALLY the name of the system!). By 2013, Matt Cutts at Pubcon talked publicly about how Google could be using machine learning (ML) in organic search.

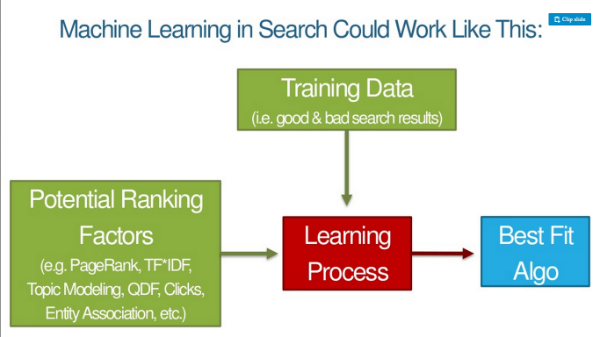

As ML takes over more of Google’s algo, the underpinnings of the rankings change. Google is public about how they use ML in image recognition and classification. They start with factors they could use to classify images, add training data (examples they tell the machine are a cat, dog, monkey, etc.), and run a learning process that arrives at a best-match algorithm. Then they can apply that pattern to live data everywhere.
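The train-then-apply loop described above can be illustrated with a toy classifier. This is only a minimal sketch, not Google's system: the nearest-centroid method, the two made-up features (`ear_pointiness`, `snout_length`) and the labeled examples are all assumptions chosen to keep the example small.

```python
from collections import defaultdict
from math import dist

def train(examples):
    """Learn one centroid (mean feature vector) per label from labeled examples."""
    sums = {}
    counts = defaultdict(int)
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        # Accumulate a running sum of each feature for this label.
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}

def classify(model, features):
    """Apply the learned pattern to new data: return the label with the closest centroid."""
    return min(model, key=lambda label: dist(model[label], features))

# Hypothetical training data: (ear_pointiness, snout_length) -> label a human supplied.
training_data = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
]

model = train(training_data)                     # the learning process
prediction = classify(model, (0.85, 0.25))       # applying the pattern to unseen data
print(prediction)
```

Nobody writes a rule such as "pointy ears mean cat" here; the rule falls out of the labeled examples, which is the shift the talk is describing.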

Jeff Dean’s slides on Deep Learning are a must-read for SEOs. Rand says they are essential reading and not too challenging to consume. Jeff Dean is a Google Fellow and someone people at Google like to joke about a lot: “The speed of light in a vacuum used to be about 35 miles per hour. Until Jeff Dean spent a weekend optimizing the physics.”

Bounce, clicks, dwell time – all these things are qualities that feed the machine learning process, and the algo tries to emulate the good SERP experiences. We’re talking about an algorithm to build algorithms. Googlers don’t feed in ranking factors. The machine determines those itself. The training data is good search results.

What does deep learning mean for SEO?

Googlers won’t know why something ranks or whether a variable is in the algo. Rand’s aside to the audience: doesn’t that sound like a lot of the things Googlers say now?

The query success metrics will be all that matters to machines:

- Long to short click ratio

- Relative CTR vs. other results

- Rate of searchers conducting additional related searches

- Sharing/amplification rate vs. other results

- Metrics of user engagement across the domain

- Metrics of user engagement on the page (how? Chrome and Android)

If lots of results on a SERP perform well on all of the above, the algorithm will keep favoring that kind of result. We’ll be optimizing more for searcher outputs. These are likely to be the criteria of on-site SEO’s future.
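To make the first two metrics in the list concrete, here is a minimal sketch of how they could be computed from a click log. Everything in it is an assumption for illustration: the `Result` record, the 30-second long-click cutoff, and the sample numbers are hypothetical, and nothing here reflects how Google actually measures these signals.

```python
from dataclasses import dataclass

# Assumed cutoff separating a "long" click from a "short" one (not a documented threshold).
LONG_CLICK_SECONDS = 30

@dataclass
class Result:
    url: str
    impressions: int       # times the result was shown on the SERP
    dwell_seconds: list    # one dwell time per click on the result

def ctr(result):
    """Click-through rate: clicks divided by impressions."""
    return len(result.dwell_seconds) / result.impressions if result.impressions else 0.0

def long_to_short_ratio(result):
    """Long clicks divided by short clicks (pogo-sticks back to the SERP)."""
    long_clicks = sum(1 for d in result.dwell_seconds if d >= LONG_CLICK_SECONDS)
    short_clicks = len(result.dwell_seconds) - long_clicks
    return long_clicks / short_clicks if short_clicks else float("inf")

def relative_ctr(result, serp):
    """A result's CTR relative to the average CTR of all results on the same SERP."""
    average = sum(ctr(r) for r in serp) / len(serp)
    return ctr(result) / average if average else 0.0

# Hypothetical SERP with two results.
a = Result("a.example", 100, [5, 8, 40, 3])
b = Result("b.example", 100, [60, 90, 45, 10, 120, 75, 50, 4])
serp = [a, b]

for r in serp:
    print(r.url, ctr(r), long_to_short_ratio(r), relative_ctr(r, serp))
```

In this made-up data, `b.example` earns twice the clicks of `a.example` and keeps most of its visitors, so it wins on both relative CTR and long-to-short ratio.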

OK – but are these metrics affecting us today? In 2014 Moz did a Queries & Clicks test. Since then, it’s been much harder to move the needle with raw queries and clicks. Google is catching on to manipulation of raw queries and clicks.

At SMX Advanced, Gary Illyes said that using clicks directly in rankings would not make too much sense with that noise. He said there were people producing noise in clicks, calling out Rand Fishkin. Case closed! Or is it?

But what if we tried long clicks vs. short clicks? At 11:39 am on June 21st, Rand asked people to run a test: click result #1 and quickly click back, then click the #4 result and dwell there. The #4 result stayed at SERP position 1 for about 12 hours. This tells us that searcher outputs affect rankings. (P.S. This is hard to replicate. Don’t do it because it’s dark magic.)

What you should be doing is whatever naturally makes people want to click on your result in the SERP.

This is why Rand says we’re optimizing for two algorithms. We have to choose how we’re balancing our work. Hammer on signals of old? They still work. Links still work. Anchor text still moves the needle. But we can see on the horizon more clearly than ever before where Google is going.

Classic On-Site SEO (ranking inputs) vs. New On-Site SEO (searcher outputs)

Both matter because there are two algorithms.

Let’s talk about the 5 new elements of modern SEO.

- Punching above your average CTR

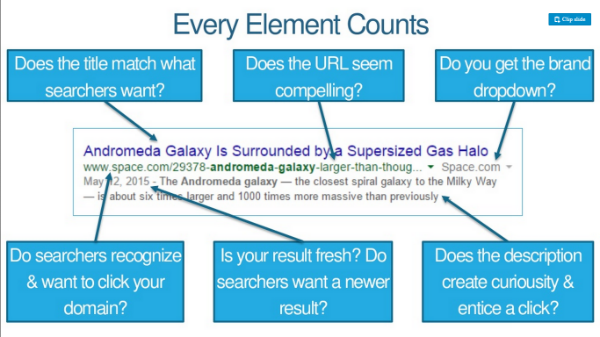

Optimize the title, meta description and URL a little for keywords but a lot for clicks. If you rank #3 but you can boost your CTR, you can earn a boost in ranking. Every element counts. Do searchers recognize and want to click your domain? Does the URL seem compelling? Do you get a brand dropdown?

Driving up CTR through branding or branded searches may give an extra boost. Branding (TV, radio, PPC) has an impact on CTR. Brand budget helps relative click-through rate and all sorts of other ranking signals, and that lift accounts for some part of this.

With Google Trends’ more accurate, customizable ranges, you can actually watch the effects of events and ads on search query volume. For example, “fitbit” queries spike after Fitbit runs ads on NFL Sundays.

- Beat fellow SERP listings on engagement

Together, pogo-sticking and long clicks might determine a lot of where you rank (and for how long). What influences them? An SEO’s checklist for better engagement:

- Content that fulfills the searcher’s conscious and unconscious needs

- Speed, speed, and more speed

- Delivers the best UX on every browser

- Compels visitors to go deeper into your site

- Avoids features that annoy or dissuade visitors

The New York Times has high-engagement graphics that ask visitors to draw their best guess at how a graph finishes.

- Filling gaps in visitors’ knowledge

Google’s looking for signals that show a page fulfills all of a searcher’s needs. ML models may note that the presence of certain words, phrases and topics predicts more successful searches. Rankings go to pages/sites that fill the gaps in searchers’ needs. Check out Alchemy API or MonkeyLearn. Run your content through them to see how it performs from an NLP perspective.

- Earning more shares, links and loyalty per visit

Data from BuzzSumo and Moz show that very few articles earn shares and links, and that the two have no correlation. People share a lot of stuff they never read. Google almost definitely classifies SERPs differently. A lot of shares on medical info won’t move the result up the rankings; accuracy will be more important.

A new KPI: Shares and links per 1000 visits. Unique visits over shares + links.
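As a rough illustration, the KPI can be computed as below. This sketch follows the KPI's name (shares plus links, normalized per 1,000 unique visits); the wording "unique visits over shares + links" describes the reciprocal, that is, visits needed per share or link. The function name and the sample figures are hypothetical.

```python
def shares_and_links_per_1000_visits(shares, links, unique_visits):
    """Return (shares + links) normalized per 1,000 unique visits."""
    if unique_visits <= 0:
        raise ValueError("unique_visits must be positive")
    return (shares + links) * 1000 / unique_visits

# Hypothetical page: 45 shares and 5 links earned from 20,000 unique visits.
kpi = shares_and_links_per_1000_visits(shares=45, links=5, unique_visits=20000)
print(kpi)  # 2.5 shares-plus-links per 1,000 visits
```

Tracking the ratio rather than raw share counts lets a low-traffic page that gets amplified often be compared fairly with a high-traffic page that rarely does.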

Knowing what makes them return or prevents them from doing so is critical, too.

We don’t need better content, we need 10x content.

- Fulfilling the searcher’s task (not just their query)

Task = what they want to accomplish when they make that query. Google doesn’t want a multi-search path of ever more focused queries. They want one broad search, after which they fill in all the steps and you complete your task.

They might use the clickstream data to help rank a site higher even if it doesn’t have traditional ranking signals. A page that answers the initial query may not be enough, especially if competitors do allow task completion.

Algo 1: Google

Algo 2: Subset of humanity that interacts with your content (in and out of search results)

“Make pages for people not engines” is terrible advice.

Engines need a lot of the things we’ve always done, and we’d better keep doing them. People need additional things, and we’d better do those too.
